Avoiding the Floaty Chair Future

Like many engineers, I’ve started using GenAI tools such as ChatGPT to help with technical tasks. But a recent experiment, in which I relied entirely on ChatGPT to debug an issue, made me stop and consider what we might be giving up in the problem-solving process.

A few months ago, I hit an error while writing some Terraform code, so I decided to run a quick experiment: what would happen if I relied solely on ChatGPT to diagnose and fix the problem, with minimal input from me?

I copied the error message from the console and pasted it into ChatGPT. The response included a detailed breakdown of what the error meant, along with several potential fixes. That kicked off a repetitive loop that I went through roughly every five minutes.

Here’s what that loop looked like:

- Copy the error message from the console

- Paste it into ChatGPT

- Try the first suggested fix

- Repeat

After several rounds of this, I landed on a working solution about 30 minutes in.

Afterward, though, I had to ask myself:

What did I actually learn?

In short: nothing.

I never needed to understand the problem. GenAI made every decision for me: what code to write, what format to use, which fix to apply. It handled everything.

The Difference Between Thinking and Copy-Pasting

That got me thinking: how does this GenAI-centric problem-solving approach compare to my usual workflow?

Normally, when I hit an error, I read through the message carefully, line by line. I search online. I might land on Stack Overflow, browse related issues, dig into documentation, and test different ideas. I take notes, try things out, and gradually piece together a deeper understanding of the system I’m working with.

In this experiment, leaning on ChatGPT robbed me of that process: the critical thinking and decision-making that come with trial-and-error experimentation.

Are We Outsourcing Too Much?

That realization made me zoom out even further and think about how often we let AI make decisions for us, sometimes without even noticing.

From morning to night, we already lean on AI for everyday decisions, such as:

- Choosing the optimal route to work

- Recommending which shows to watch

- Planning workouts

- Suggesting which laptop to buy

So what comes next? Letting AI decide when to wake up? Who to talk to? What to eat or drink?

This is what I call the “floaty chair lifestyle”: a world where AI handles everything and humans stop thinking for themselves.

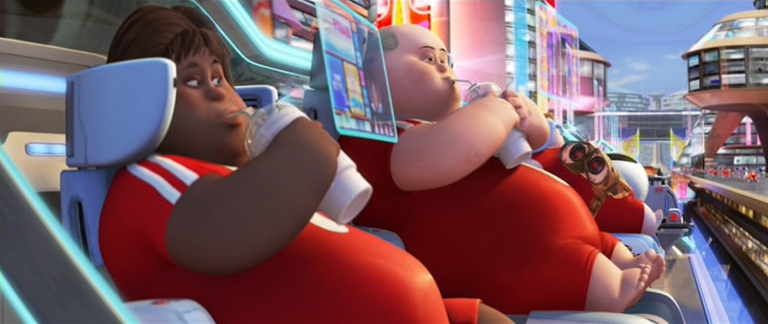

Sound familiar? It’s essentially the plot of WALL-E, where people exist but don’t really live. They lie back in floating chairs while an AI runs everything aboard the ship they live on.

The Fix: AI Thinking With Me, Not For Me

To avoid ending up in that floaty chair future, I’ve adopted a simple but powerful technique to stay sharp: rubber duck debugging.

If you haven’t heard of it, rubber duck debugging is a method in which an engineer explains a problem out loud, often to a literal rubber duck. Simply putting the issue into words can help you spot the error or understand the logic more clearly.

I apply the same principle with GenAI. The key is in how I prompt it:

“You must act as my rubber duck. Let me explain my problem to you step by step. Don’t immediately solve it—just ask clarifying questions and highlight any gaps or mistakes in my thinking.”

A prompt like that transforms ChatGPT from a solution machine into a thinking partner. It keeps me in the experimentation loop, instead of outsourcing all the work.
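If you talk to a model through an API rather than a chat window, one way to keep the rubber-duck rule in force for a whole session is to send it as a system message instead of retyping it every turn. The Python below is a minimal sketch under that assumption: `start_rubber_duck_session` and `add_turn` are hypothetical helpers, the instruction text is adapted from the prompt above, and the `{"role": ..., "content": ...}` message shape follows the convention used by chat-completion APIs such as OpenAI’s. It only builds the transcript; sending it to a model is left out, and there’s no guarantee a model will stay in the role.

```python
from typing import Dict, List

# Hypothetical helpers for illustration only; not part of any SDK.
# The role/content dictionary shape mirrors common chat-completion APIs.
Message = Dict[str, str]

# Adapted from the rubber-duck prompt in this post.
RUBBER_DUCK_INSTRUCTION = (
    "You must act as my rubber duck. Let me explain my problem to you step by step. "
    "Don't immediately solve it. Just ask clarifying questions and highlight any "
    "gaps or mistakes in my thinking."
)


def start_rubber_duck_session(first_explanation: str) -> List[Message]:
    """Open a transcript whose system message pins the model to the duck role."""
    return [
        {"role": "system", "content": RUBBER_DUCK_INSTRUCTION},
        {"role": "user", "content": first_explanation},
    ]


def add_turn(messages: List[Message], role: str, content: str) -> List[Message]:
    """Return a new transcript with one more turn; the input list is not modified."""
    if role not in ("user", "assistant"):
        raise ValueError(f"unexpected role: {role!r}")
    return messages + [{"role": role, "content": content}]


if __name__ == "__main__":
    session = start_rubber_duck_session(
        "Terraform fails on the provider block in my module. "
        "I suspect a version mismatch, but I'm not sure."
    )
    # Illustrative reply only; a real one would come from the model.
    session = add_turn(session, "assistant", "Which provider versions does the module expect?")
    session = add_turn(session, "user", "I haven't checked the module's constraints yet.")
    for message in session:
        print(f"{message['role']:>9}: {message['content']}")
```

Returning a new list from `add_turn` instead of mutating the old one keeps earlier snapshots of the conversation intact, so you can look back at how your own explanation evolved.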

Other Example Prompts for Rubber Duck Debugging with GenAI

Cooking scenario (planning a meal with leftovers):

“You’re my rubber duck. I want to figure out what meal I can make with these leftovers: roast chicken, sweetcorn, and rice. I’ll walk through my thought process. Don’t suggest a recipe—but ask questions about flaws or missing steps in my thinking.”

Technical scenario (Terraform debugging):

“You’re my rubber duck. I’m working with Terraform and getting an error related to a provider block in my module. I think it might be due to version mismatches, but I’m not sure. I’ll walk through my assumptions and steps—please ask questions if anything doesn’t add up or if there are blind spots. Do not provide a solution.”

Final Thoughts

I don’t want to end up in the floaty chairs like the people in WALL-E. That means I need to keep experimentation, reflection, and curiosity at the center of how I solve problems.

At the same time, I can’t ignore the incredible value of AI tools.

So, I’ve made a decision:

I’ll use AI to think with me, not for me.